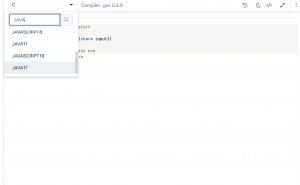

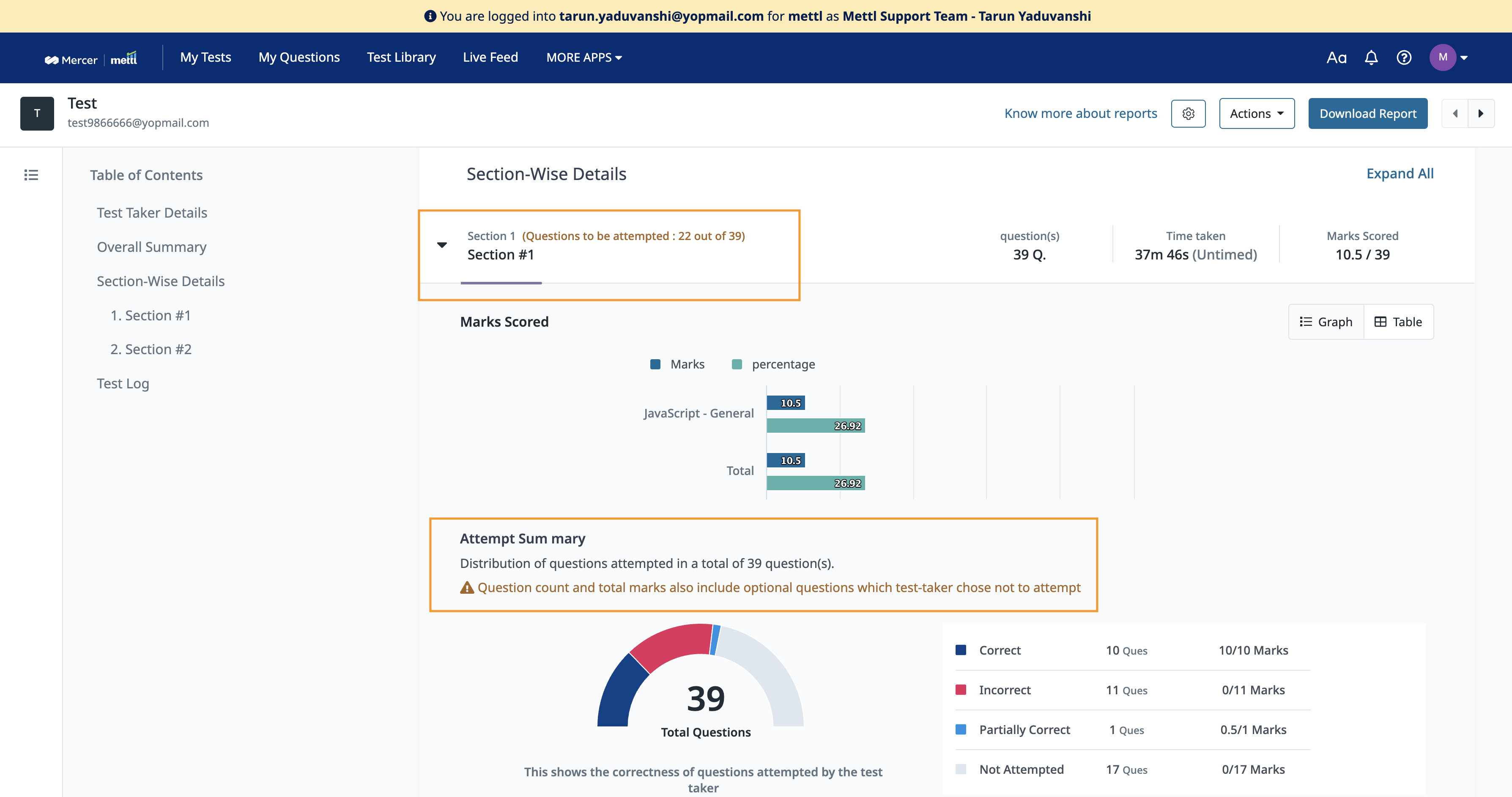

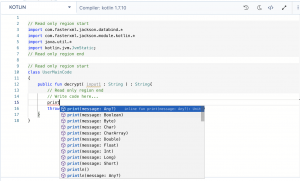

We are happy to announce that we have added support for version 1.7 of Kotlin on our general-purpose backend coding environment (CodeLysis).

This update also brings auto-complete and language support (IntelliSense) for Kotlin.

Clients will be able to select Kotlin 1.7 while creating or editing questions, and test-takers will be able to attempt coding questions in Kotlin 1.7 wherever available.

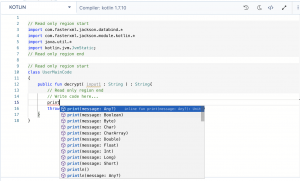

We are happy to announce that we have added support for Java 17, the latest LTS (Long Term Support) version of Java available, on our general-purpose backend coding environment (CodeLysis).

This update comes with support for IntelliSense in Java 17 as well.

Clients will be able to select Java 17 while creating or editing questions, and test-takers will be able to attempt coding questions in Java 17 wherever available.

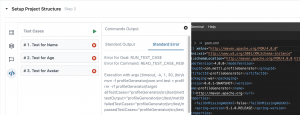

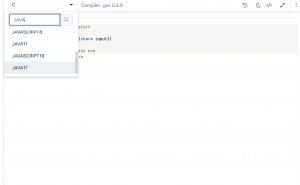

We are excited to announce a much-awaited feature: you can now view the console output for “Run Test Cases” while creating project-based questions (I/O). This gives you access to logs and makes it easier to debug test cases while creating project-based questions.

In case there are multiple command sets and/or prerequisite goals, output for each of them will be displayed separately.


To understand more about Mercer | Mettl’s project-based development environment, click here.

We are thrilled to share that we have revamped the UI and UX of our Front-end Simulator (FES). The improved UI gives test-takers a larger area for writing code and viewing the output.

The new UX adds a walkthrough that highlights the key components of the interface, along with features like auto-complete and language support (IntelliSense) to help test-takers write code faster and more efficiently.

We hope you are as excited as we are to let test-takers get a feel for the all-new interface of our front-end coding environment!

Continuing our enhancements to plagiarism calculation on our general-purpose backend coding environment (CodeLysis), we have added specific reasoning for test-takers whose plagiarism score is tagged as N/A.

A test-taker’s plagiarism score can be tagged as N/A for several reasons, and this enhancement lets users know the exact reason behind the tag.

Alongside this, Mercer | Mettl’s documentation on plagiarism calculation is now just a click away from the test-taker’s report.

Now you can choose to show Feedback Seeker’s details on the survey window!

Because Feedback Providers fill out the survey for multiple Feedback Seekers, it can be difficult for them to remember whom a given survey is for, especially since survey windows can remain open in the browser for up to a certain number of days.

For improved clarity, we have now provided an option to show the survey’s and the Feedback Seeker’s details in the survey window. This makes it clear whom the feedback is for, so that each individual receives the correct feedback and is not confused with someone else.

In the assessment-level settings, a string built from the available fields can be used to create a custom survey name that is shown in the survey window. This string can be modified as required:

The string created in this case is: ‘Survey Name’ for ‘Seeker Name’ – ‘Seeker EmailId’. This is how it will appear in the survey window:
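For a concrete picture of how such a field-based string expands, here is a minimal Python sketch. The placeholder syntax and the field names (`survey_name`, `seeker_name`, `seeker_email`) are assumptions made for illustration. They are not the platform's actual field tokens, and the sample values are invented.

```python
from string import Template

# Hypothetical placeholder names; the platform's real field tokens may differ.
SURVEY_TITLE_TEMPLATE = Template("$survey_name for $seeker_name - $seeker_email")


def render_survey_title(survey_name: str, seeker_name: str, seeker_email: str) -> str:
    """Fill the custom survey-name string with one Feedback Seeker's details."""
    return SURVEY_TITLE_TEMPLATE.substitute(
        survey_name=survey_name,
        seeker_name=seeker_name,
        seeker_email=seeker_email,
    )


# Invented sample values, used only to show the substitution.
print(render_survey_title("Leadership 360", "Asha Rao", "asha.rao@example.com"))
```

Each Feedback Seeker gets a distinct title from the same string, which is what lets a Feedback Provider tell several open survey windows apart.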

Stay tuned for more amazing updates coming your way.

You can now choose to show custom instructions before the survey starts!

Previously, any instruction meant for the entire survey had to be either included in the invitation email or placed in the first section’s instructions. Now, the custom instructions option available at assessment level can be used to show survey-level instructions, giving them a dedicated space. Participants are also asked to confirm that they have read and understood the instructions before proceeding.

Below is how the setting appears for the assessment linked to the survey:

This is where the Custom Instruction is shown, right after a successful system compatibility check:


You can now upload an image of your choice to use as the report’s cover page image!

Images above a suggested dimension can be uploaded for improved customization of 360View reports. This takes us one step closer to fully branded client reports: they appear more personalized and reflect the customer’s brand.

The setting for this can be accessed at survey level under ‘Report Setup’:

After clicking on the option above, the user can select an image of their choice and edit it with the various options available. Customization options such as ‘Logo positioning’, ‘Text color’ and ‘Text positioning’ are now available as well:

After the changes are saved, the image appears as the selected layout for the cover page:

This is how the custom image appears on the report’s cover page, giving customers a WOW moment with their reports:


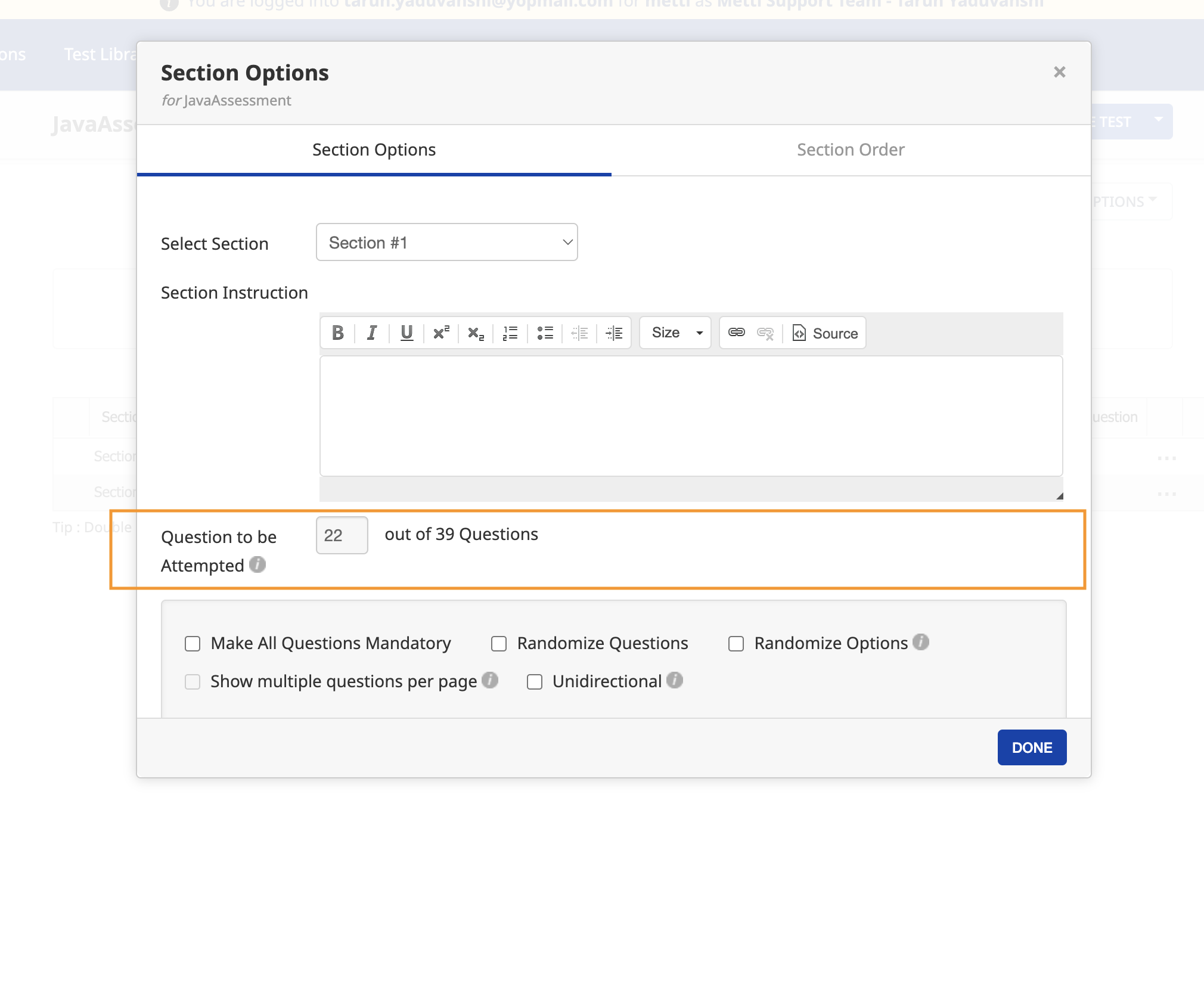

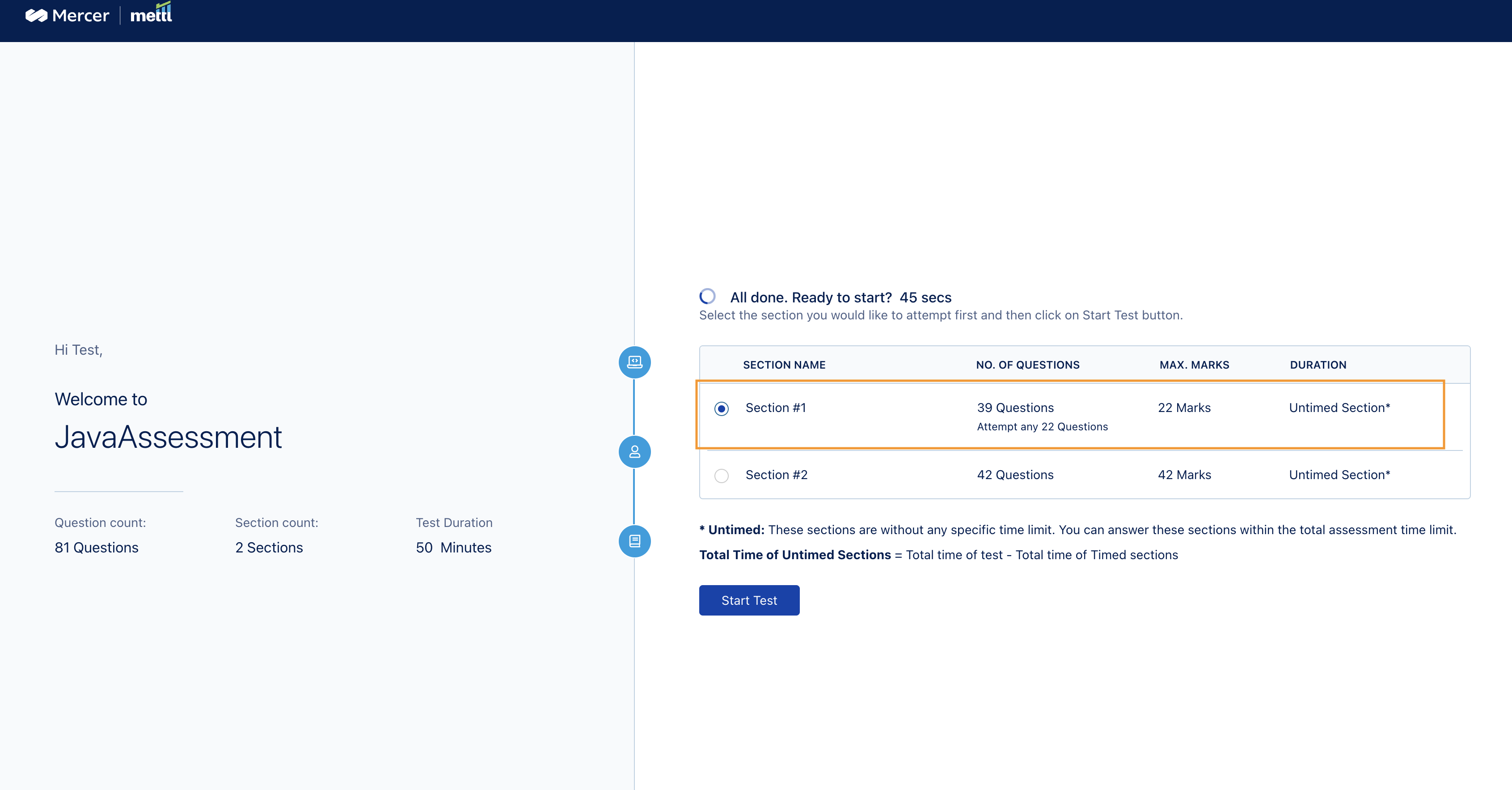

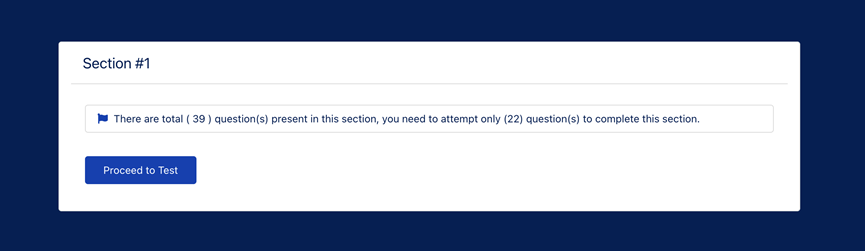

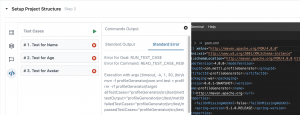

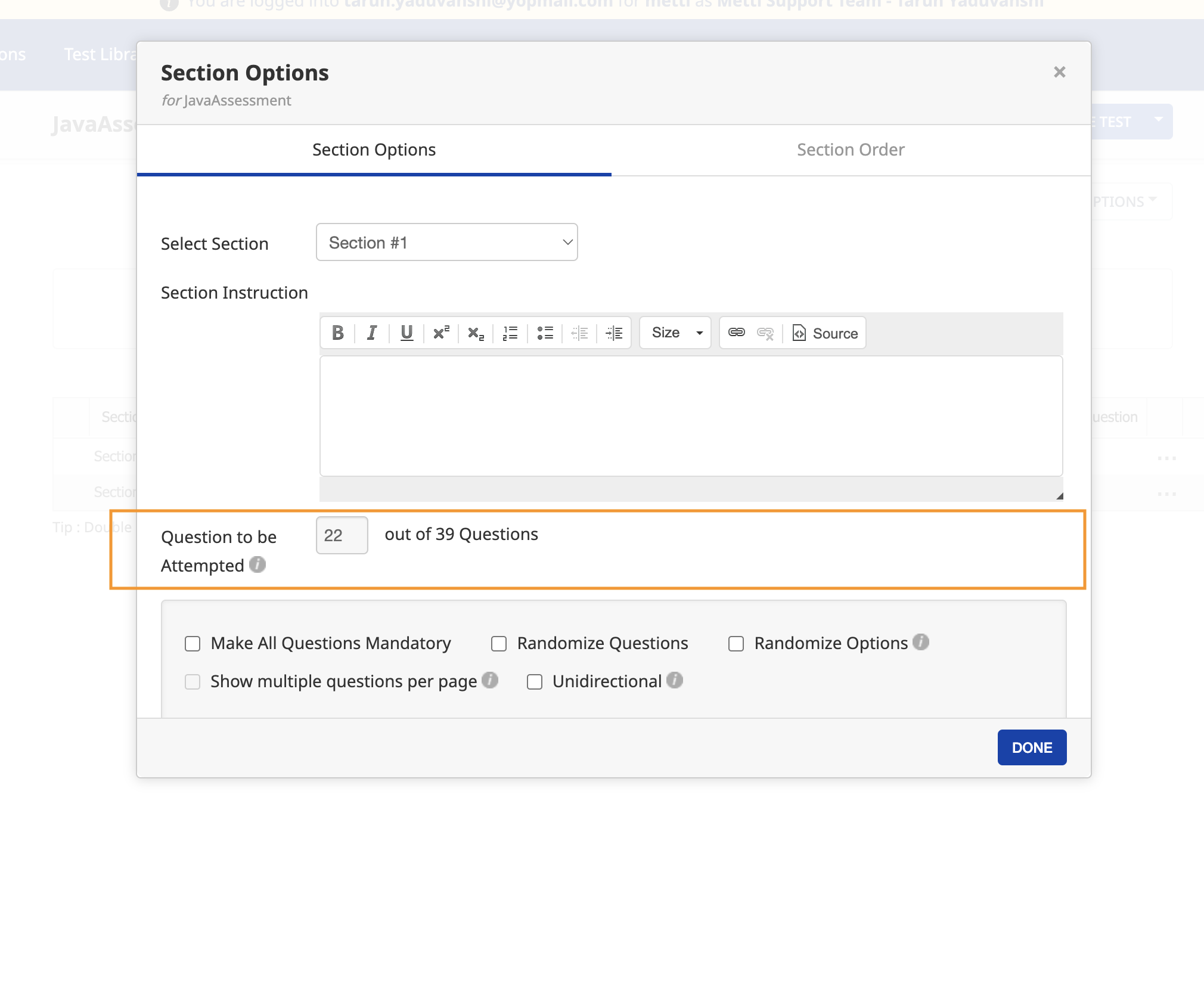

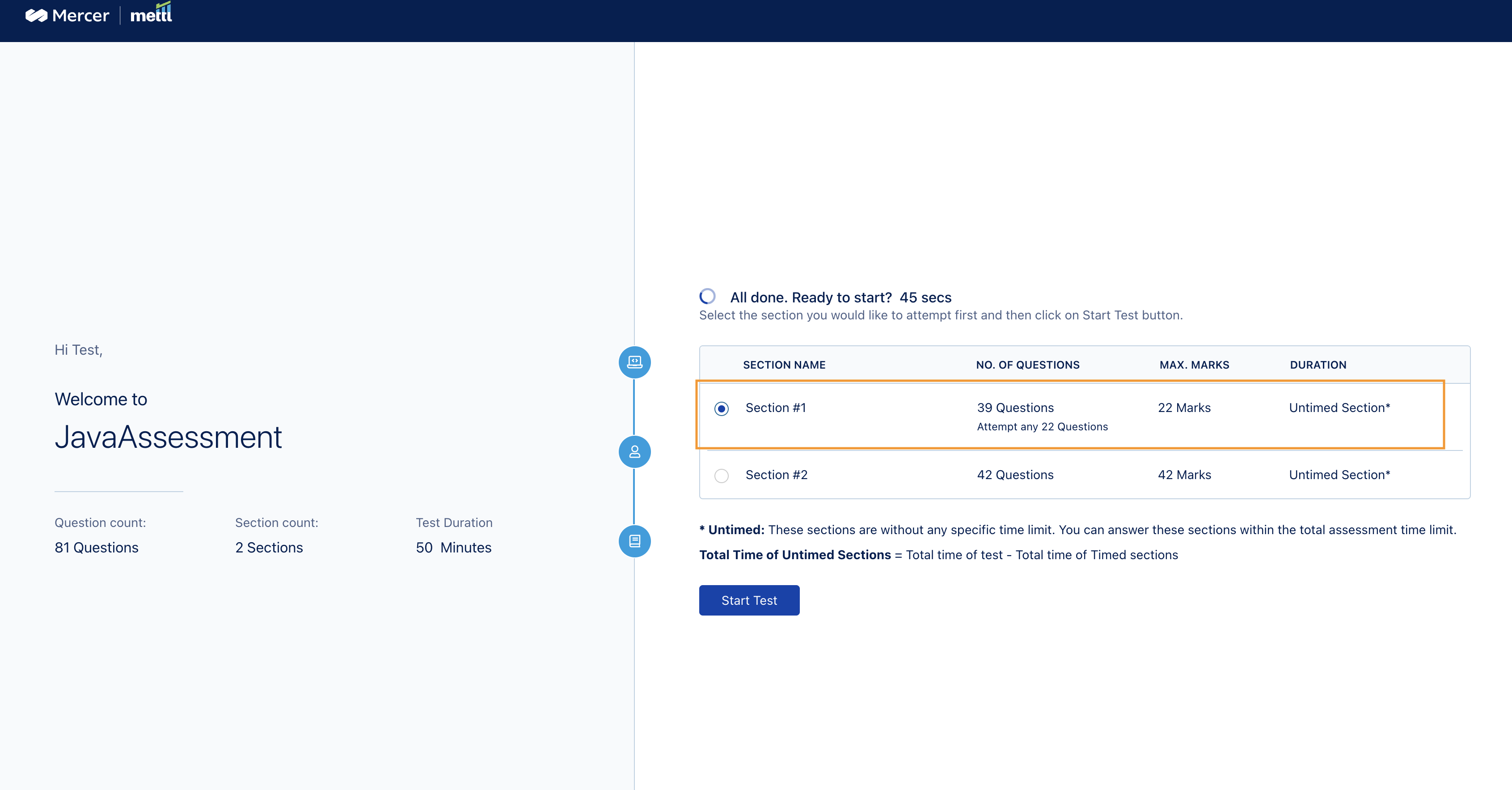

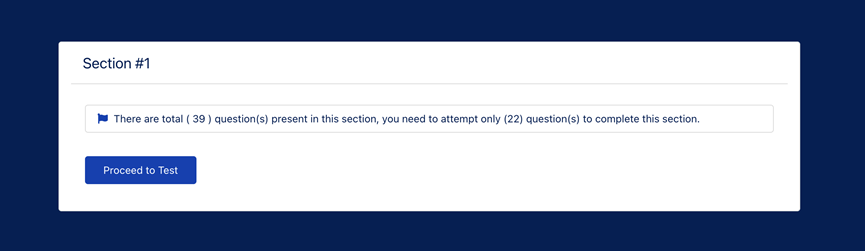

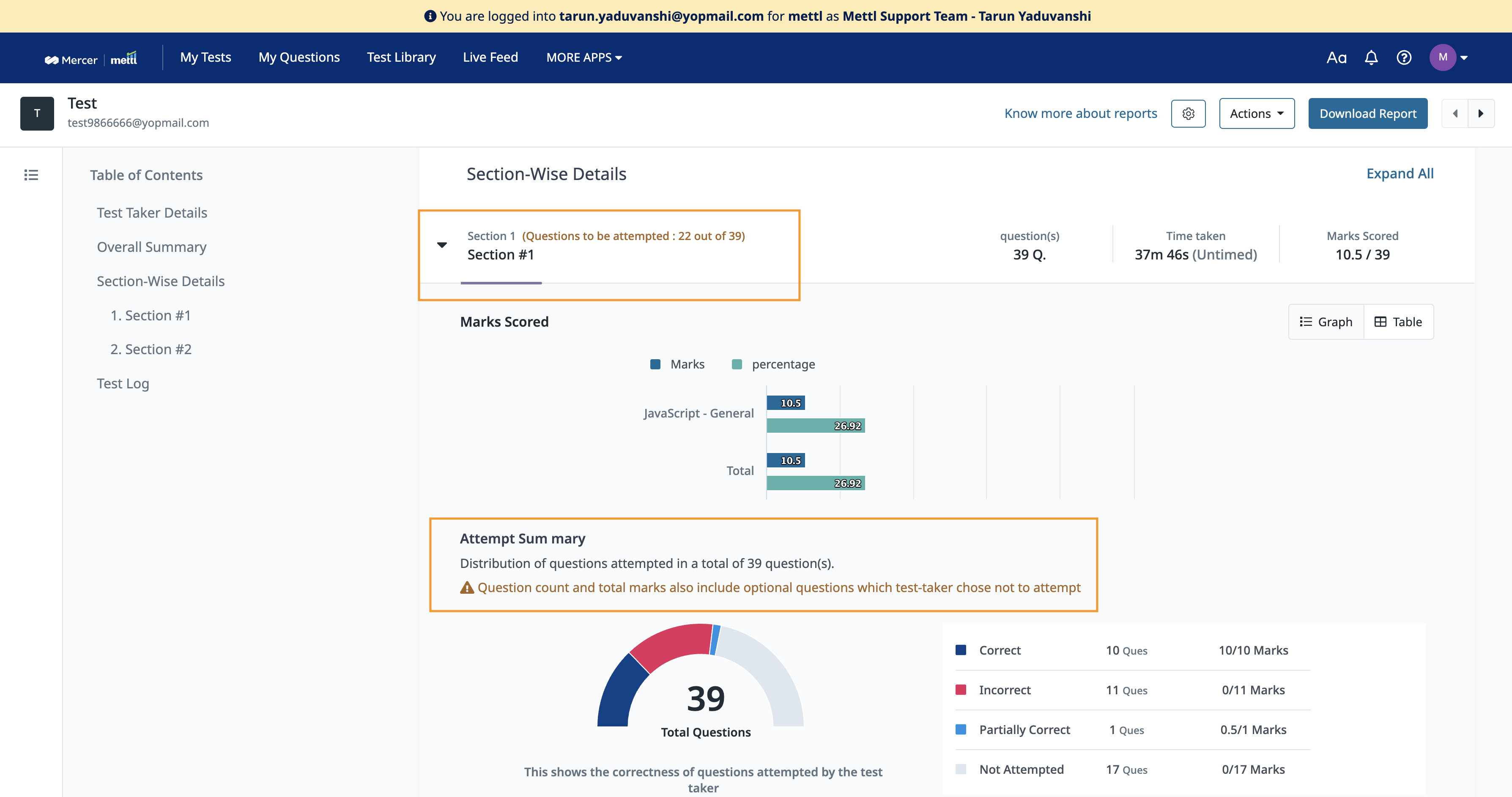

We now have an “Attempt any N questions” feature at section level, which gives the test-taker the flexibility to answer only N questions from a larger set of questions in a section. This brings us one step closer to replicating the offline scenario of attempting only a certain number of questions from a pool.

This setting can be enabled at section level:

In the test-taker window, the information below is shown to the test-taker before the test starts:

In the test window, this information is shown in the section instructions:

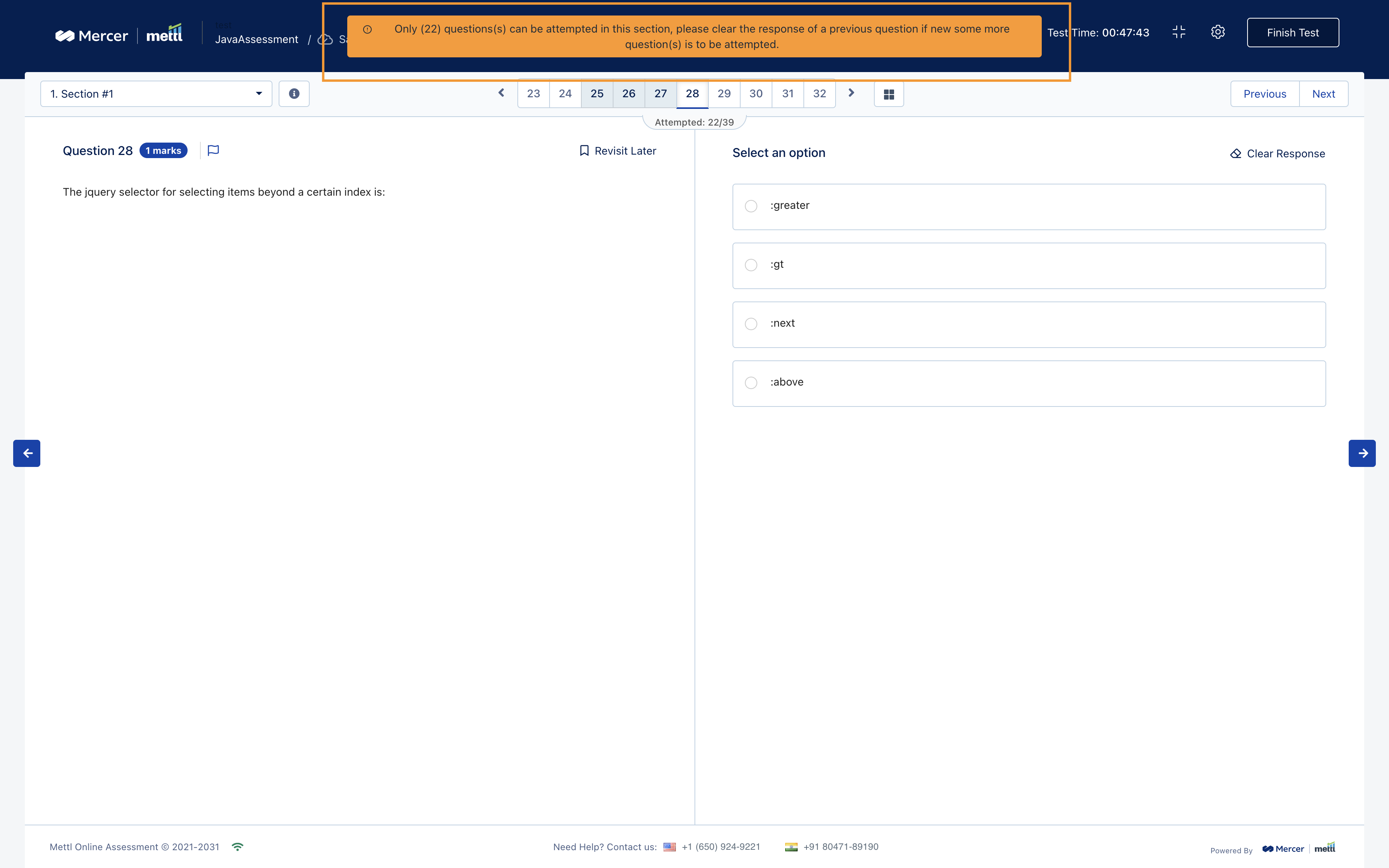

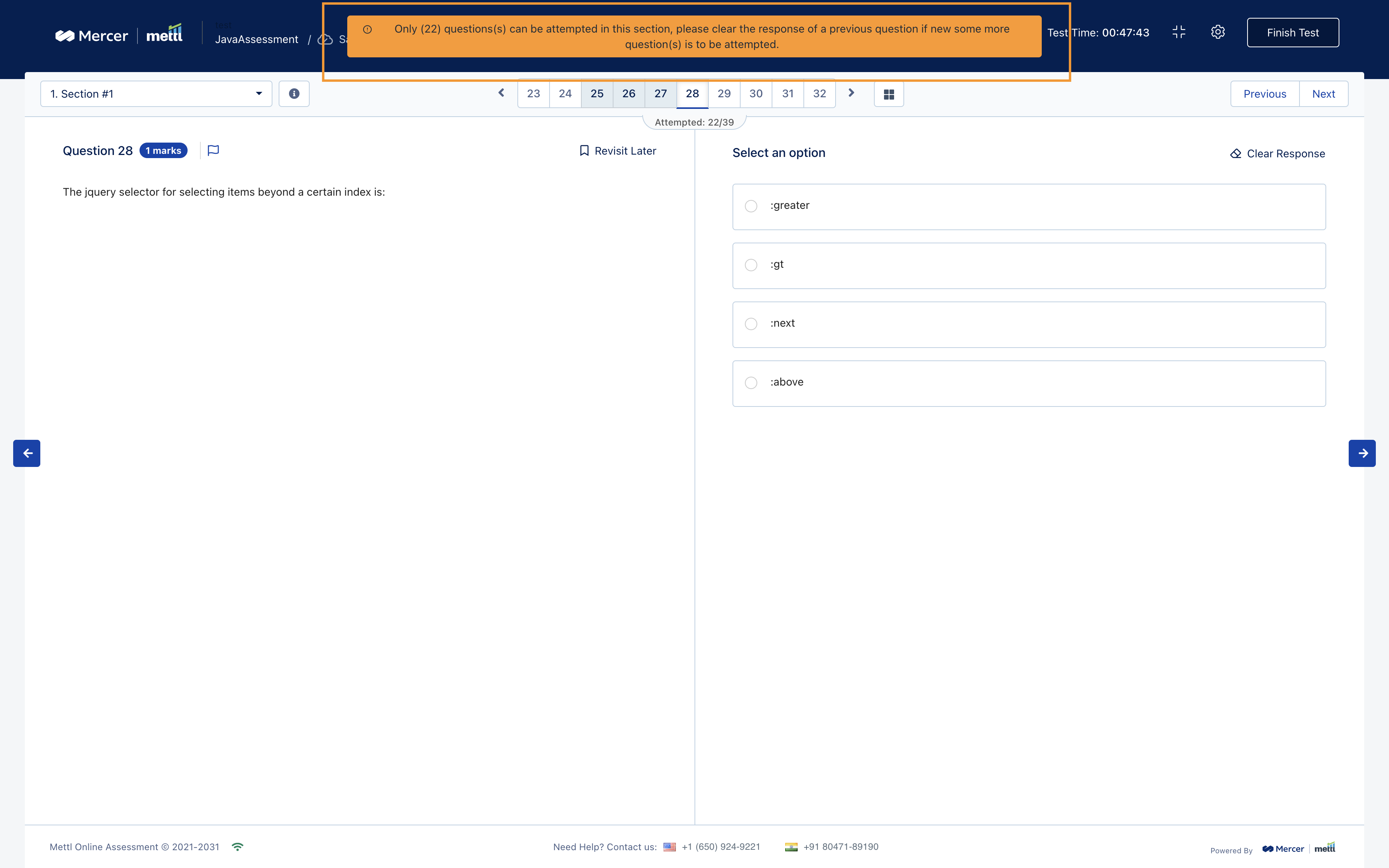

In the test window, if the test-taker tries to attempt more than N questions:

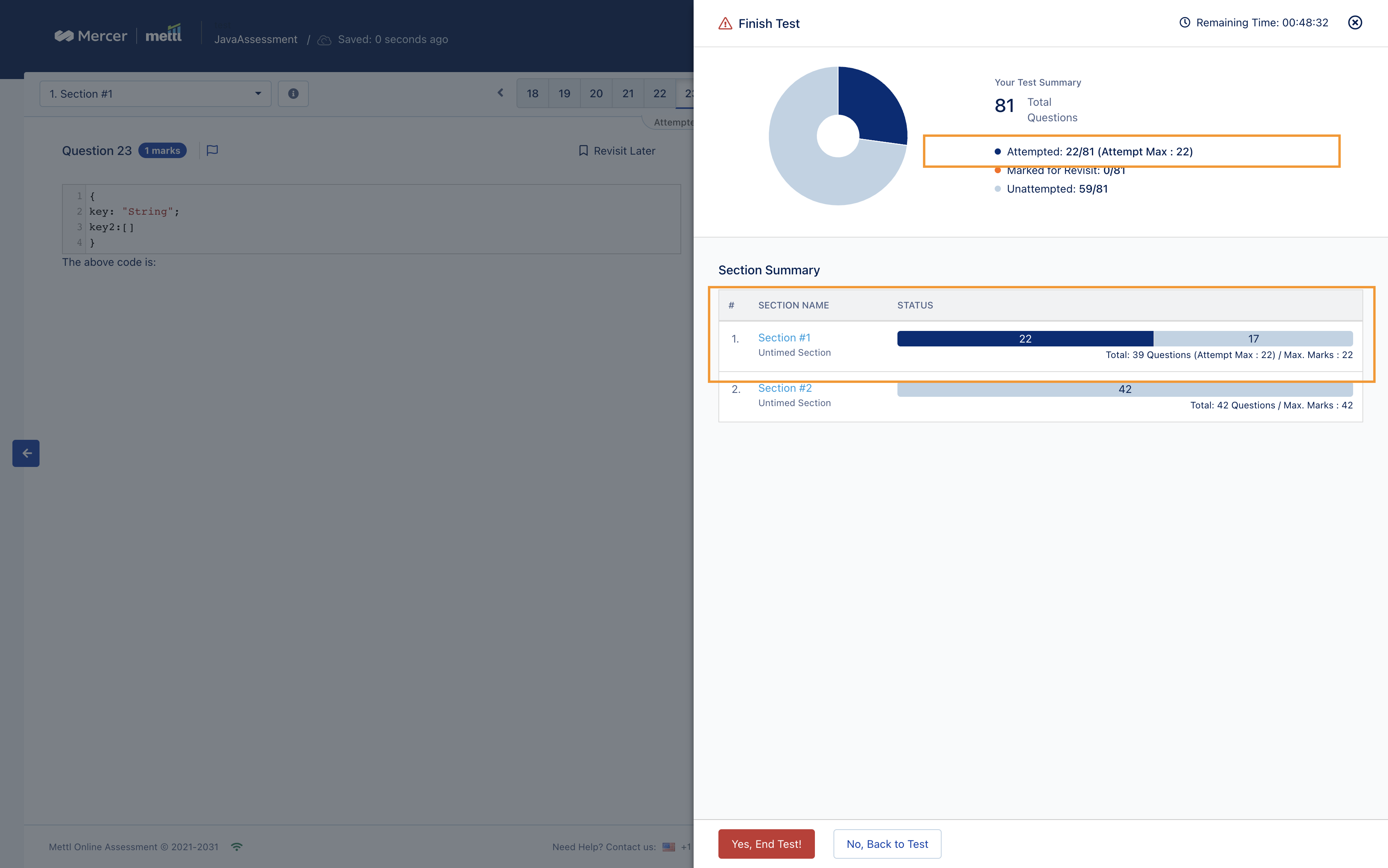

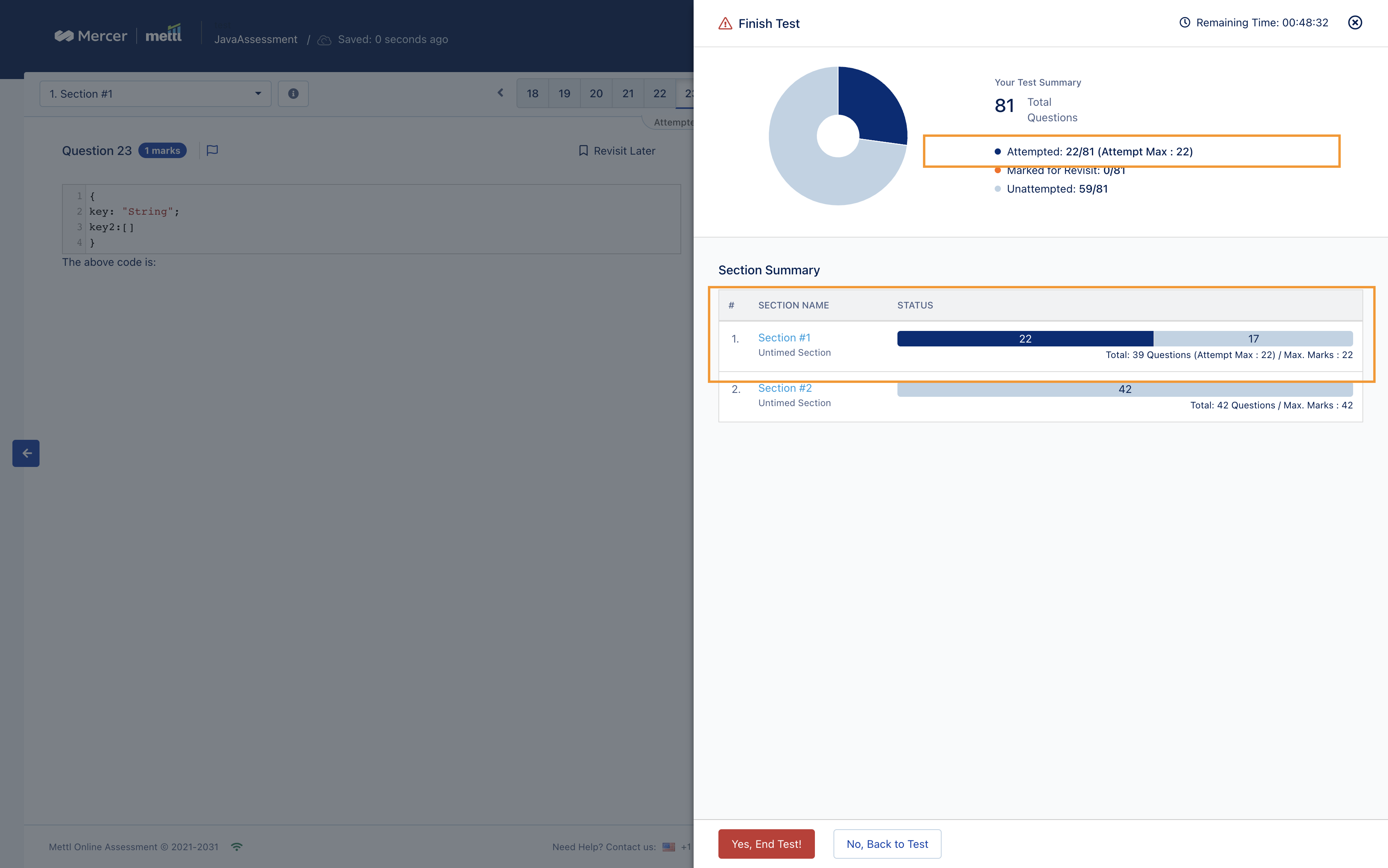

This information is also shown on the question attempt summary screen, just before the test is finished:

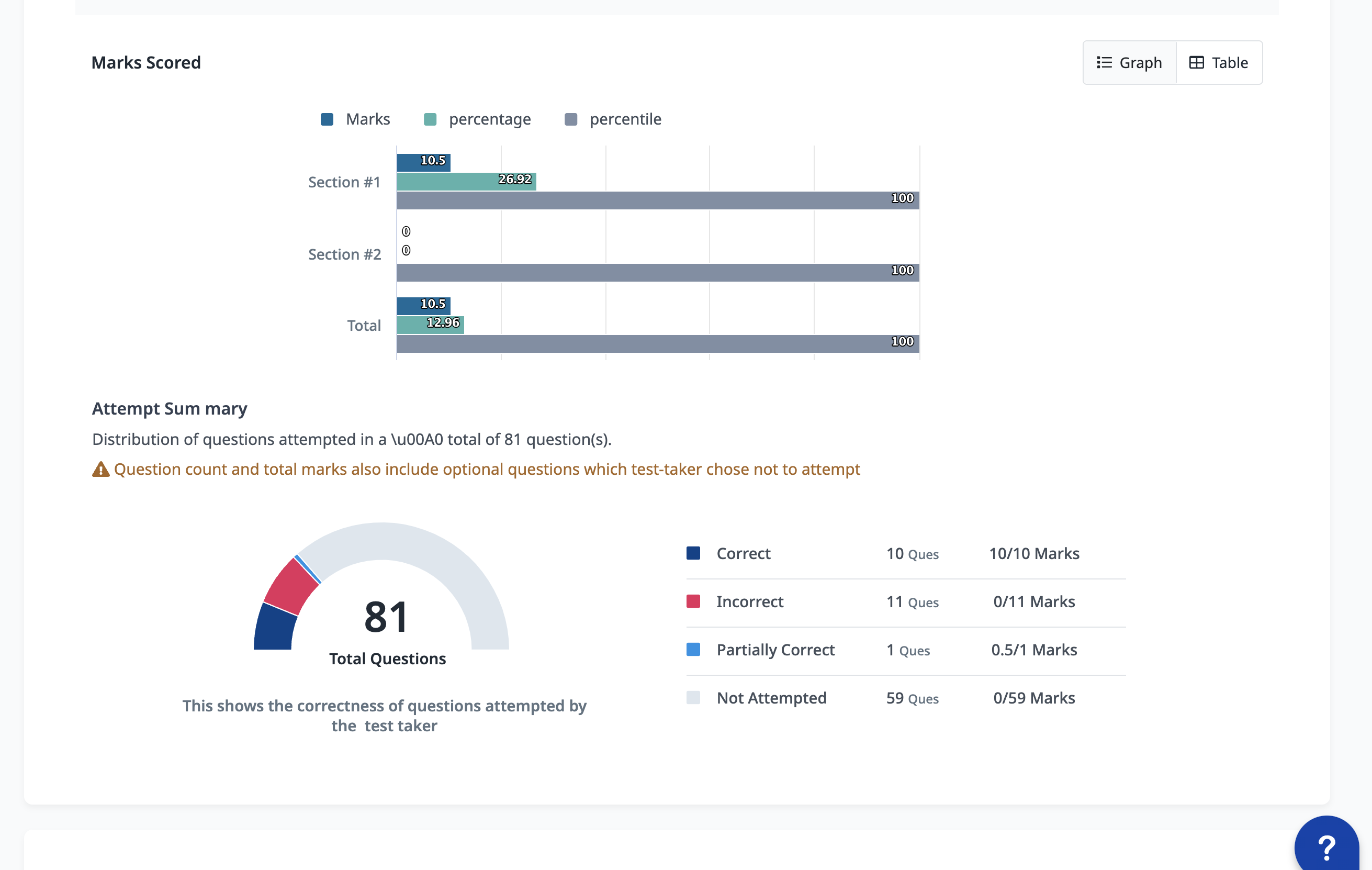

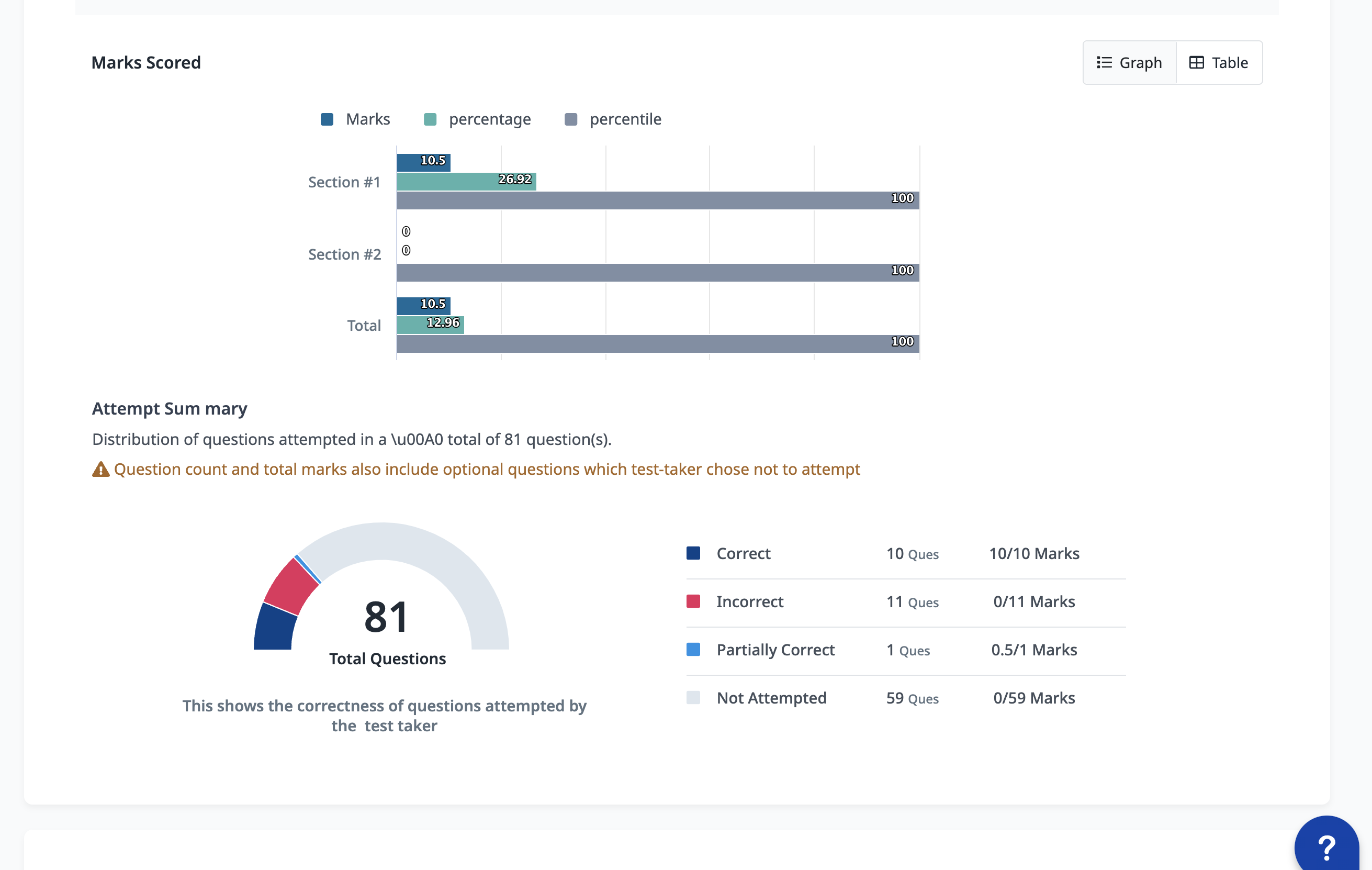

Reporting changes:

In reports, the effect of the “Attempt any N questions” setting, i.e., the change in overall marks due to optional questions, is displayed at:

- Overall Summary level

- Section header in Section-wise details

- Section header in Question-wise details

The rest of the information (e.g., difficulty index, bookmarks, sectional graphs/tables) remains the same, i.e., the “questions to be attempted” setting does not apply to the Total Marks and the Questions Count.
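To make the distinction between the two kinds of figures concrete, here is a small Python sketch. It assumes every question in a section carries the same marks, which the announcement does not state. The class and field names are hypothetical, and the platform's actual calculation may differ.

```python
from dataclasses import dataclass


@dataclass
class Section:
    name: str
    question_count: int      # questions shown in the section
    marks_per_question: int  # ASSUMPTION: equal marks for every question
    attempt_any: int         # the "N" in "Attempt any N questions"

    @property
    def total_marks(self) -> int:
        # Not affected by the setting: counts every question in the section.
        return self.question_count * self.marks_per_question

    @property
    def attemptable_marks(self) -> int:
        # Maximum score when only N answers can be attempted.
        return min(self.attempt_any, self.question_count) * self.marks_per_question


section = Section("Aptitude", question_count=5, marks_per_question=2, attempt_any=3)
print(section.total_marks, section.attemptable_marks)  # prints "10 6"
```

Under these assumptions, the section keeps a Questions Count of 5 and Total Marks of 10, while the marks achievable through the three attempted questions are 6.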

“Attempt any N questions” info at Overall Summary level:

“Attempt any N questions” info in the header of Question-wise details and Section-wise details:

